RefVision: CV-Assisted Judging for Powerlifting.

RefVision is my computer-vision-assisted system designed to provide real-time video analysis for powerlifting meets. By leveraging serverless AWS components, pose estimation, and LLM-generated explanations, RefVision delivers quick, objective feedback to judges and lifters. Here’s how it came about, how it works, and why it matters.

Problem Statement

Imagine training for years, hitting the best squat of your life… only for three judges to tell you ‘No Lift’ – a decision you disagree with, and with no way to appeal. What if that call cost you $20,000? This happened a few months ago at the ZeroW Pro. It’s a common source of tension in powerlifting, and it’s a problem that I think we can solve with technology.

Contentious Calls: Disagreements on squat depth or other reasons for failing a lift.

No Appeals Mechanism: Lifters have no mechanism to challenge a decision.

Rising Stakes: Large and growing prize money and records magnify the importance of disputes.

Sport Integrity: Unresolved and unexplained controversies diminish trust and viewer engagement.

Engagement: Experience from other sports shows that the strategic introduction of video assistant referee (VAR) review improves viewer engagement.

Do you think this was a good lift?

The judges decided that it was high, and therefore no lift. That decision cost this lifter first place and $20,000 in prize money. She and her coach strongly disputed the decision, but there was no recourse. This seems unfair, and it is also an opportunity to do things better and to create a competitive advantage over other federations. This was the inspiration for the RefVision project.

Key Concepts

Supplement, Not Replace: Works with referees, providing an objective second opinion.

Appeal Mechanism: One VAR appeal per meet encourages strategic usage without disrupting flow.

Impartial Analysis: Pose estimation + classification provide a quantitative and verifiable mechanism to judge “Good” vs. “No Lift.”

Engagement: Live overlays and natural-language explanations help the audience understand the reasons for a decision.

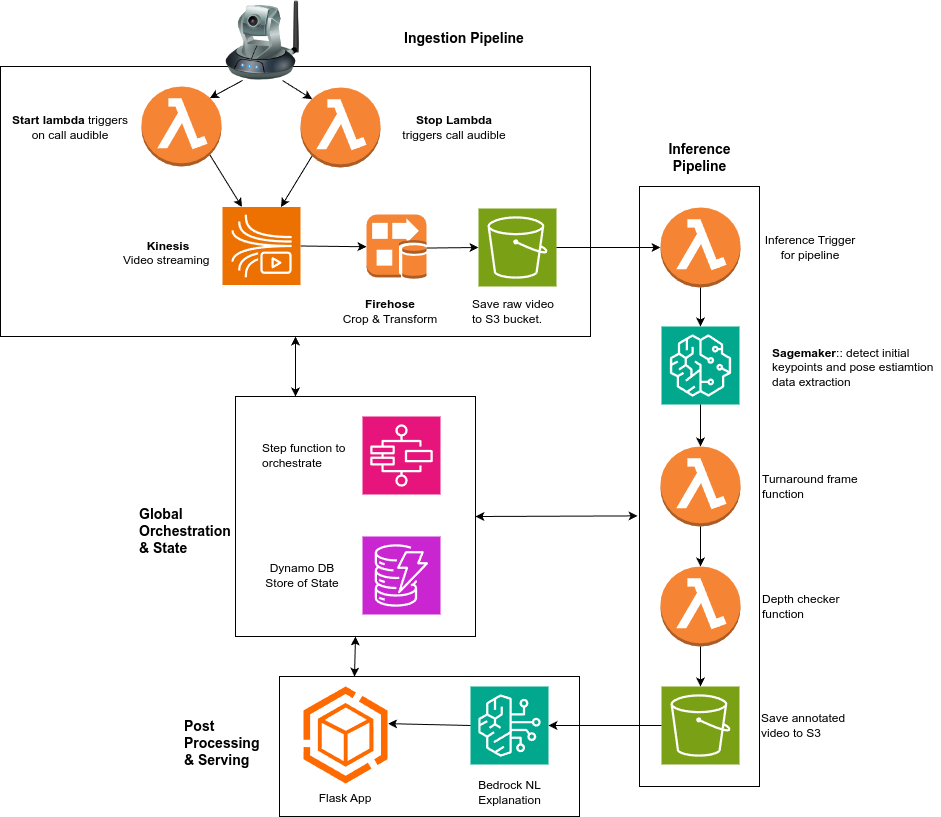

Architecture

Flow explanation

Live Capture: Camera → Kinesis → S3

Preprocessing: Lambda triggered by DynamoDB

Step Functions: Coordinates inference steps

Inference: Pose detection via SageMaker

Explanation: AWS Bedrock → DynamoDB

Annotated Video: S3 for final media

Web App: Displays decisions, explanations
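The hand-off from the DynamoDB-triggered Lambda to Step Functions could look roughly like the sketch below. It is only an illustration under stated assumptions: the attribute names `meet_id` and `video_key`, the `STATE_MACHINE_ARN` environment variable, and the choice to start one execution per inserted item are all hypothetical and not taken from the RefVision code. It also assumes the table's stream is configured to include the new item image.

```python
import json
import os


def executions_from_stream(event):
    """Build one Step Functions input per newly inserted DynamoDB item."""
    inputs = []
    for record in event.get("Records", []):
        # Only react to new items; ignore MODIFY and REMOVE events.
        if record.get("eventName") != "INSERT":
            continue
        image = record["dynamodb"]["NewImage"]
        inputs.append({
            # Hypothetical attribute names, stored as DynamoDB strings ("S").
            "meet_id": image["meet_id"]["S"],
            "video_key": image["video_key"]["S"],
        })
    return inputs


def handler(event, context):
    """Lambda entry point: start one state machine execution per new item."""
    # Imported lazily so the pure function above can be tested without AWS.
    import boto3

    sfn = boto3.client("stepfunctions")
    payloads = executions_from_stream(event)
    for payload in payloads:
        sfn.start_execution(
            stateMachineArn=os.environ["STATE_MACHINE_ARN"],  # assumed env var
            input=json.dumps(payload),
        )
    return {"started": len(payloads)}
```

Keeping the event parsing in a pure function means the mapping from stream records to pipeline inputs can be checked locally, with the AWS call isolated in the handler.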

Here’s an example of the output:

The skeleton overlay clearly shows the movement throughout the squat. It’s visually clear that the lifter has hit depth and it’s a good lift. To make it even clearer, the JSON output shows the numbers that support this:

{"decision": "Good Lift!", "turnaround_frame": 473, "keypoints": {"left hip y": 800.6494140625, "right hip y": 796.8759765625, "left knee y": 749.3765869140625, "right knee y": 739.2586669921875, "average hip y": 798.7626953125, "average knee y": 744.317626953125, "best delta": 54.445068359375}}
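The numbers in this JSON can be reproduced with a few lines of arithmetic. The sketch below assumes image coordinates where y grows downward, so a positive hip-minus-knee delta means the hips are below the knees. It also assumes the turnaround frame is the one with the largest delta, and it uses a placeholder threshold of 0 pixels. The real threshold and frame-selection rule are not stated here, so treat `DEPTH_THRESHOLD_PX`, `FrameKeypoints`, and `judge_squat` as hypothetical names.

```python
from dataclasses import dataclass

# Hypothetical threshold in pixels; the actual value is not given in this post.
DEPTH_THRESHOLD_PX = 0.0


@dataclass
class FrameKeypoints:
    frame: int
    left_hip_y: float
    right_hip_y: float
    left_knee_y: float
    right_knee_y: float

    @property
    def delta(self) -> float:
        """Average hip y minus average knee y (positive = hips below knees)."""
        avg_hip = (self.left_hip_y + self.right_hip_y) / 2
        avg_knee = (self.left_knee_y + self.right_knee_y) / 2
        return avg_hip - avg_knee


def judge_squat(frames, threshold=DEPTH_THRESHOLD_PX):
    """Pick the frame with the largest delta and compare it to the threshold."""
    best = max(frames, key=lambda f: f.delta)
    decision = "Good Lift!" if best.delta > threshold else "No Lift"
    return {
        "decision": decision,
        "turnaround_frame": best.frame,
        "best delta": best.delta,
    }


# Frame 473 uses the keypoints from the JSON above; frame 400 is made up.
frames = [
    FrameKeypoints(400, 600.0, 600.0, 740.0, 740.0),
    FrameKeypoints(473, 800.6494140625, 796.8759765625,
                   749.3765869140625, 739.2586669921875),
]
result = judge_squat(frames)
```

With the keypoints from frame 473, the averages are 798.7626953125 (hips) and 744.317626953125 (knees), which gives the delta of 54.445068359375 reported in the JSON.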

Natural Language Explanation

Of course, the JSON doesn’t mean very much to the average person, so we use a language model to translate it into natural language and make it accessible:

“At the bottom of this squat (frame 473), the average hip position was 798.76, and the average knee position was 744.32. The difference between the hips and knees (54.45), which exceeds the required threshold for depth, so this attempt was judged a Good Lift!”
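One way to produce an explanation like this is to pass the decision JSON to a model through the Bedrock runtime's Converse API. The sketch below is an assumption-laden illustration, not the prompt or model RefVision actually uses: the prompt wording, the `build_prompt` and `explain` helpers, and the inference settings are all hypothetical, and the caller must supply a model ID they have access to.

```python
import json


def build_prompt(result: dict) -> str:
    """Turn the decision JSON into a plain-language explanation request."""
    return (
        "You are explaining a powerlifting squat decision to spectators.\n"
        "Using only the numbers in the JSON below, explain in two sentences "
        "why the attempt was judged as it was. "
        # Assumption: image coordinates, so larger y is lower in the frame.
        "Larger y values are lower in the frame.\n\n"
        + json.dumps(result, indent=2)
    )


def explain(result: dict, model_id: str) -> str:
    """Ask a Bedrock model for the explanation (requires AWS credentials)."""
    # Imported lazily so build_prompt can be used and tested without AWS.
    import boto3

    client = boto3.client("bedrock-runtime")
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": build_prompt(result)}]}],
        inferenceConfig={"maxTokens": 200, "temperature": 0.0},
    )
    return response["output"]["message"]["content"][0]["text"]
```

Restricting the model to the numbers in the JSON and using a low temperature is a design choice aimed at keeping the explanation consistent with the decision that was actually computed.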